May 19, 2025

Validated Reference Architectures: Streamlining AI Infrastructure Deployment

The NVIDIA Enterprise AI Factory is a full-stack validated design that guides enterprises in building and deploying their own on-premises AI factory. NVIDIA's Reference Architectures (RAs), such as the NVIDIA Cloud Partner Reference Architecture (NCPRA) and the Enterprise Reference Architecture (ERA), are blueprints designed to simplify the deployment of high-performance, scalable, and secure AI infrastructure. These architectures provide a standardized framework for constructing AI-powered data centers, often referred to as "AI factories."

The Enterprise AI Factory is designed to support a wide range of AI-enabled enterprise applications, agentic and physical AI workflows, autonomous decision-making, and real-time data analysis. It features NVIDIA Blackwell accelerated infrastructure tailored to enterprise needs and integrates specialized AI software to ensure seamless operation and robust performance. It is also validated by NVIDIA IT, drawing on NVIDIA's engineering know-how and partner ecosystem to help enterprises shorten time-to-value and mitigate the risks of AI deployment.

This article explores the advantages of NVIDIA Reference Architectures, NVIDIA's motivations for promoting them, and how ASUS builds on the NVIDIA Enterprise AI Factory and the RAs to create cutting-edge AI solutions.

Advantages of NVIDIA RAs

- Optimized Performance:

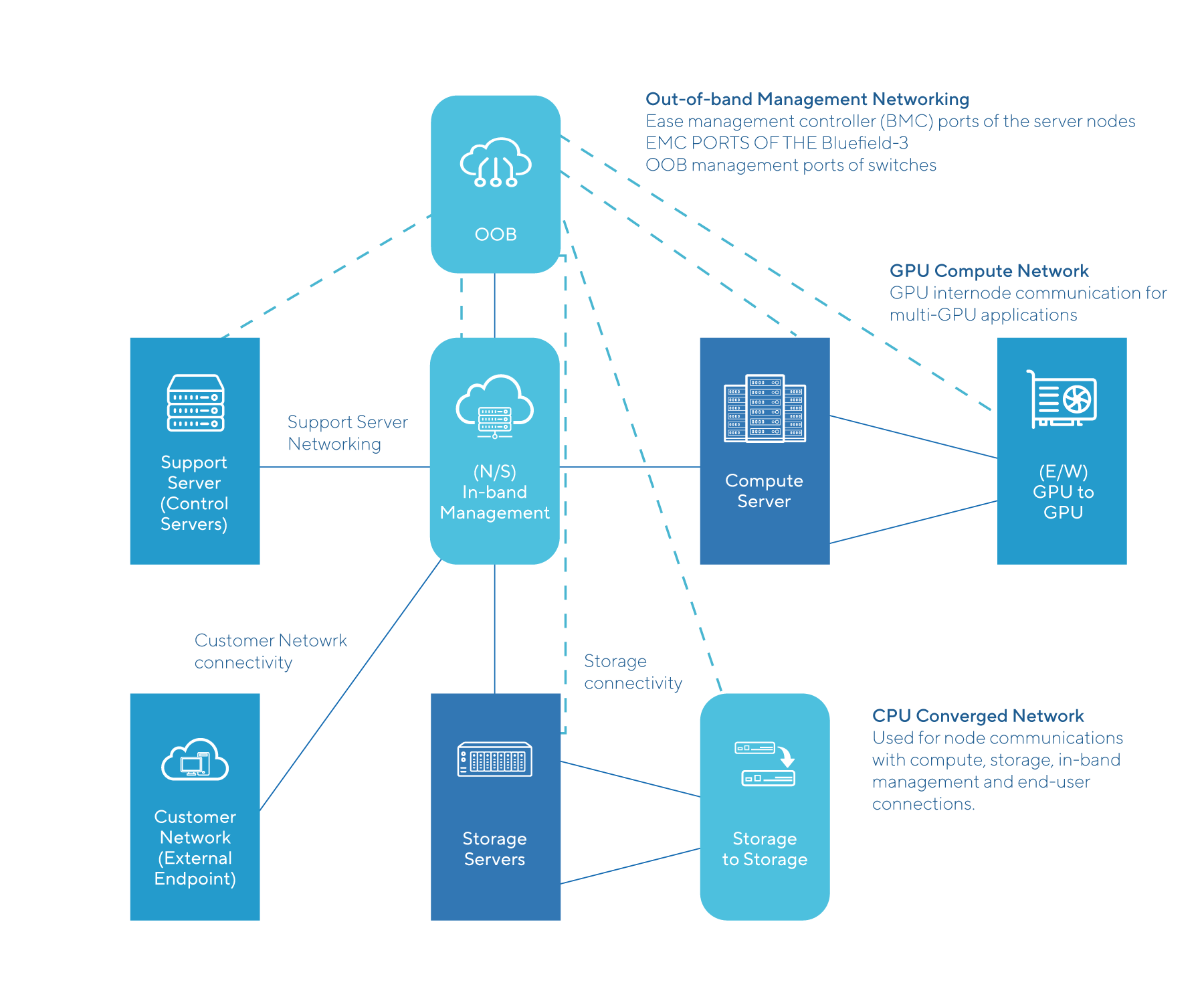

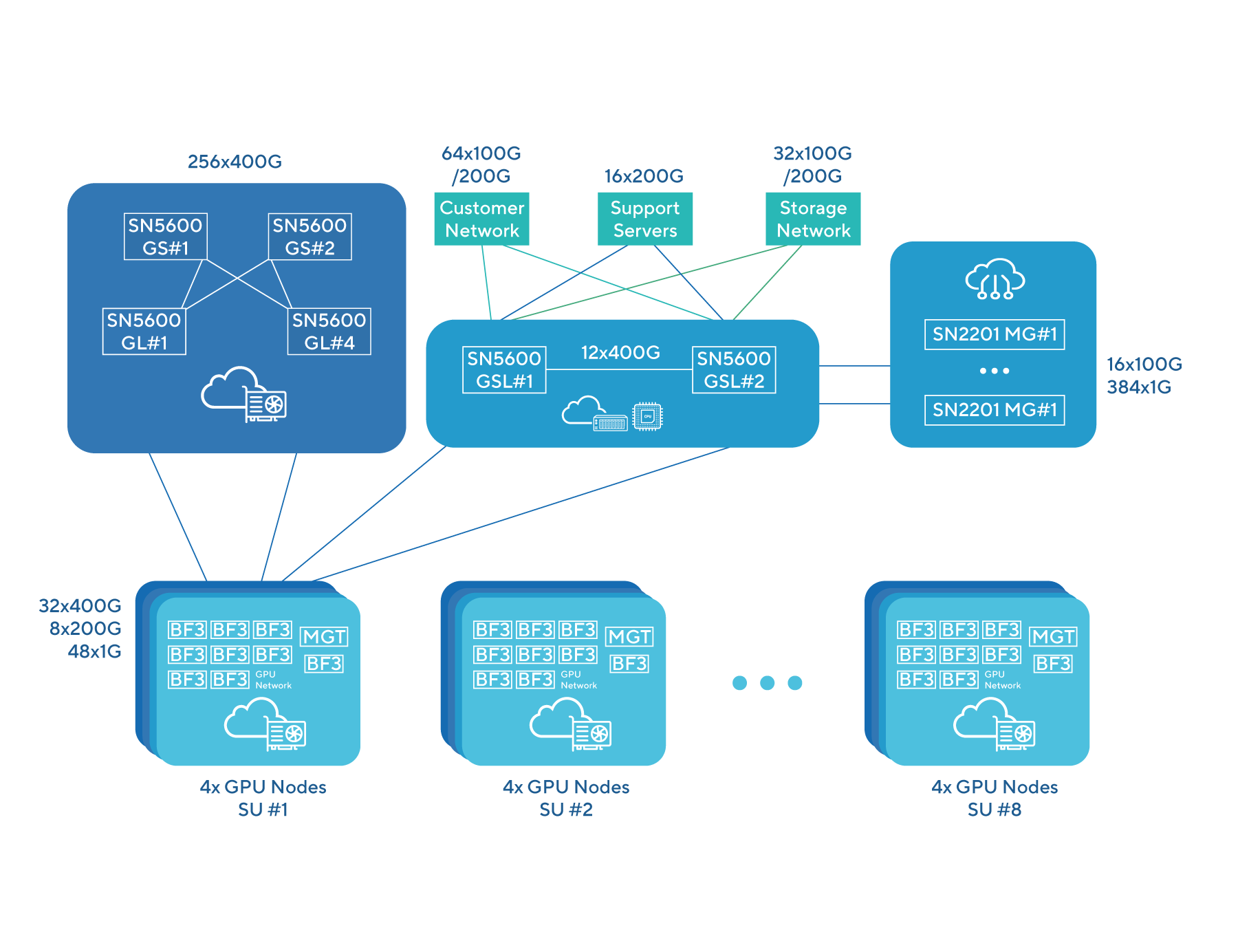

NVIDIA's Reference Architectures are specifically engineered to maximize the performance of NVIDIA's advanced hardware, including GPUs, CPUs, and networking technologies like Spectrum-X Ethernet and BlueField-3 DPUs. For instance, NCPRA is optimized for AI cloud service providers, enabling efficient handling of compute-intensive workloads such as generative AI and large language models (LLMs). Similarly, ERA offers optimized configurations for enterprise-grade AI training and inference, ensuring high throughput and low latency.

- Scalability:

NCPRA and ERA provide flexible design patterns to accommodate workloads of varying scales. For example, ERA supports clusters ranging from 4 to 128 nodes, with per-node configurations such as "2-4-3" (2 CPUs, 4 GPUs, 3 NICs) or "2-8-5" (2 CPUs, 8 GPUs, 5 NICs), scaling up to 1,024 GPUs (128 nodes with 8 GPUs each). This scalability allows organizations to start small and incrementally expand their infrastructure as AI demands grow, reducing upfront costs and risks.
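The node patterns above lend themselves to a quick sizing calculation. The following is a minimal sketch, not an NVIDIA or ASUS tool: the names `NodeConfig` and `cluster_totals` are hypothetical, and it assumes every node in a cluster uses the same CPU-GPU-NIC pattern and that the 4 to 128 node range applies to both patterns.

```python
from dataclasses import dataclass

# Node-count range quoted in the article for ERA clusters.
MIN_NODES, MAX_NODES = 4, 128


@dataclass(frozen=True)
class NodeConfig:
    """Per-node component counts (hypothetical helper, not an NVIDIA API)."""
    cpus: int
    gpus: int
    nics: int

    @classmethod
    def parse(cls, pattern: str) -> "NodeConfig":
        # A pattern such as "2-8-5" reads as CPUs-GPUs-NICs per node.
        cpus, gpus, nics = (int(part) for part in pattern.split("-"))
        return cls(cpus, gpus, nics)


def cluster_totals(pattern: str, nodes: int) -> dict[str, int]:
    """Multiply per-node counts by the node count.

    Assumes a homogeneous cluster: every node uses the same pattern.
    """
    if not MIN_NODES <= nodes <= MAX_NODES:
        raise ValueError(f"nodes must be between {MIN_NODES} and {MAX_NODES}")
    cfg = NodeConfig.parse(pattern)
    return {
        "cpus": cfg.cpus * nodes,
        "gpus": cfg.gpus * nodes,
        "nics": cfg.nics * nodes,
    }


if __name__ == "__main__":
    for pattern in ("2-4-3", "2-8-5"):
        for nodes in (MIN_NODES, MAX_NODES):
            print(pattern, nodes, cluster_totals(pattern, nodes))
```

Under these assumptions, a 128-node cluster of "2-8-5" nodes totals 1,024 GPUs, which matches the upper bound quoted above, while the same node count with "2-4-3" nodes totals 512 GPUs.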

- Simplified Deployment:

NVIDIA's Reference Architectures offer pre-validated hardware and software stacks, reducing the complexity of building AI infrastructure from scratch. These architectures include certified server configurations, networking recommendations, and software such as NVIDIA AI Enterprise, streamlining resource provisioning, workload management, and monitoring. This comprehensive approach accelerates the time-to-market for AI solutions.

- Enhanced Security and Reliability:

NVIDIA's Reference Architectures incorporate enterprise-grade security features and undergo rigorous testing to ensure reliability. Certified systems using NVIDIA Grace CPU and NVLink-C2C interconnect provide robust performance for mission-critical applications such as conversational AI and fraud detection. This is particularly important for enterprises transforming traditional data centers into AI factories.

- Cost-Effectiveness:

The standardized designs of NCPRA and ERA optimize resource utilization, reducing energy consumption and operational costs. For example, the Blackwell architecture, which is often integrated into reference architectures, offers an 8X improvement in energy efficiency for data analytics compared to traditional CPU-based systems. This efficiency translates into significant cost savings for enterprises and cloud service providers.

Why NVIDIA Promotes Reference Architectures

- Accelerating AI Adoption:

The rapid growth of generative AI and the increasing demand for accelerated computing have driven NVIDIA's promotion of Reference Architectures. By providing standardized blueprints, NVIDIA lowers the barrier to entry for enterprises to deploy AI infrastructure, accelerating AI adoption across industries such as healthcare, finance, and manufacturing.

- Strengthening Ecosystem Collaboration:

NVIDIA collaborates with partners to deliver solutions based on Reference Architectures. By providing pre-validated designs, NVIDIA ensures compatibility and interoperability, enabling partners to build systems based on NVIDIA GPUs, networking, and software, which further solidifies NVIDIA's leadership in the AI ecosystem.

- Meeting AI Factory Market Demands:

The rise of generative AI is transforming data centers into "AI factories" that produce intelligence. NVIDIA's Reference Architectures, particularly ERA, are designed to meet this demand, providing scalable, high-performance infrastructure for training and deploying LLMs and other foundation models. This aligns with NVIDIA's vision of driving the next industrial revolution through AI.

- Reducing Deployment Risks:

Building AI infrastructure from scratch is fraught with challenges, including compatibility issues and performance bottlenecks. NVIDIA's Reference Architectures mitigate these risks by providing tested and certified configurations, ensuring predictable outcomes and reducing deployment failures. This is particularly appealing to organizations transitioning from traditional computing to AI-driven workflows.

ASUS Integration with NVIDIA RAs

ASUS, a leading global provider of computing solutions, integrates NVIDIA's Reference Architectures into its AI infrastructure offerings, drawing on its expertise in hardware design and thermal management to deliver tailored, high-performance solutions.

- NVIDIA-Certified Systems:

ASUS offers a range of NVIDIA-certified servers, including the HGX and ESC8000 series. These servers support advanced GPUs like the NVIDIA RTX PRO 6000 Blackwell Server Edition and H200, ensuring optimal performance for AI inference and training workloads. The modular design of ASUS MGX servers provides flexible configurations, enhancing scalability and return on investment.

- Advanced AI PODs:

At NVIDIA GTC 2025, ASUS introduced its next-generation AI POD, which is based on the NVIDIA GB300 NVL72 platform. This compact, high-density solution adheres to NVIDIA Reference Architecture principles, delivering exceptional processing power for generative AI and data analytics. ASUS AI PODs are designed for enterprise-grade scalability and efficiency, making them ideal for AI factories.

- Omniverse and OVX Integration:

ASUS servers are certified for NVIDIA OVX, a platform for large-scale 3D simulation and digital twin workloads. By supporting NVIDIA's Omniverse ecosystem, ASUS enables industries such as architecture and manufacturing to leverage Reference Architecture-based infrastructure for real-time collaboration and simulation, enhancing productivity and innovation.

Conclusion

NVIDIA's Reference Architectures, including NCPRA and ERA, provide a robust framework for building AI infrastructure, offering optimized performance, scalability, and cost-effectiveness. NVIDIA promotes these architectures to accelerate AI adoption, strengthen ecosystem collaboration, and meet the market demand for AI factories. By integrating NVIDIA's Reference Architectures into its certified servers, AI PODs, and cooling solutions, ASUS delivers scalable, secure, and efficient AI infrastructure. The collaboration between NVIDIA and ASUS is driving the transformation of data centers into AI powerhouses, paving the way for the next era of computing.