
Unimaginable AI. Unleashed.

Bring your NVIDIA GB200 concept to life, backed by ASUS

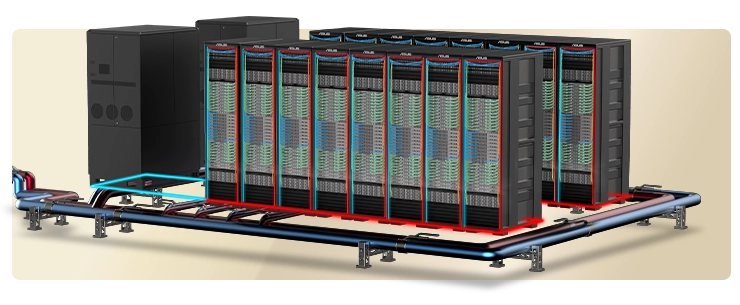

Unlock the full potential of your AI infrastructure with the ASUS AI POD, powered by NVIDIA GB200 Grace™ Blackwell Superchips. This supercharged AI system is engineered to accelerate real-time trillion-parameter models, such as large language models (LLMs) and mixture-of-experts (MoE) models, providing unparalleled performance for large-scale AI projects, hyperscale data centers and research initiatives. Tailored for enterprises and R&D teams driving innovation, ASUS AI POD empowers you to push the boundaries of what’s possible while accelerating your time to market through an ASUS-exclusive, software-driven approach.


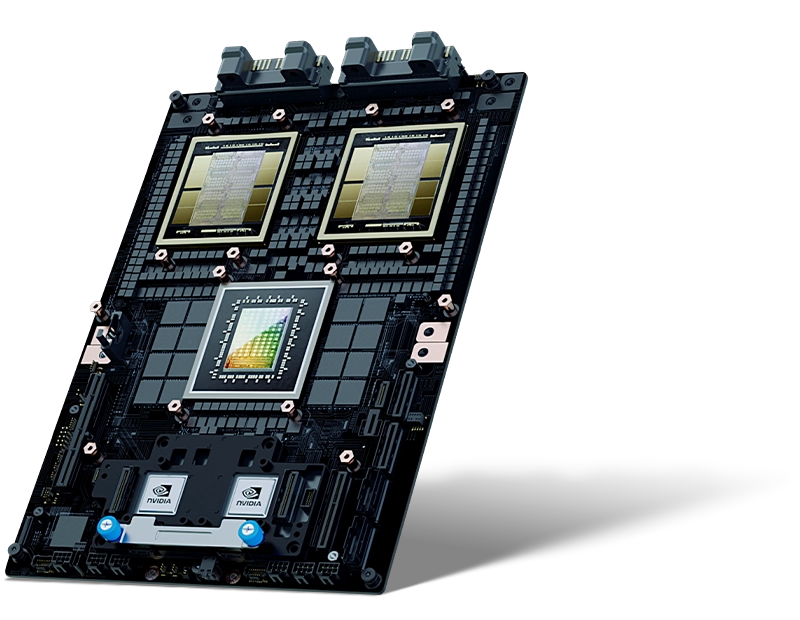

The NVIDIA Blackwell GPU breakthrough

ASUS AI POD contains NVIDIA GB200 Grace™ Blackwell Superchips, built on NVIDIA Blackwell GPUs that each pack 208 billion transistors. Each Blackwell GPU combines two reticle-limited dies, connected by a 10 terabytes per second (TB/s) chip-to-chip interconnect, into a single unified GPU. NVIDIA GB200 NVL72 delivers 1,440 PFLOPS of computing power in FP4 precision, leveraging its advanced Tensor Cores and fifth-generation NVLink interconnect to achieve high performance for AI workloads.
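Dividing the rack-level FP4 figure by the 72 Blackwell GPUs in one GB200 NVL72 NVLink domain gives a rough per-GPU share. This is only a back-of-the-envelope sketch: it assumes the total splits evenly across GPUs and says nothing about sparsity, clocks or achievable utilization.

```python
# Back-of-the-envelope per-GPU share of the GB200 NVL72 FP4 figure.
# Assumption: the rack total divides evenly across its 72 Blackwell GPUs.
NVL72_FP4_PFLOPS = 1_440   # rack-level FP4 figure quoted above
GPUS_PER_RACK = 72         # Blackwell GPUs in one GB200 NVL72 NVLink domain

per_gpu_pflops = NVL72_FP4_PFLOPS / GPUS_PER_RACK
print(f"~{per_gpu_pflops:.0f} PFLOPS FP4 per GPU")  # prints "~20 PFLOPS FP4 per GPU"
```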


1. 2 x OOB MGMT Switch 1GbE; 1 x OS Switch 1GbE (Optional); 1 x RMC (Optional)
2. 3 x 1RU Power Shelf 33kW
3. 10 x Compute Trays
4. 9 x Non-Scalable NVLink Switch Trays
5. 8 x Compute Trays
6. 3 x 1RU Power Shelf 33kW
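The tray counts in the rack diagram can be cross-checked against the "72" in NVL72. The sketch below assumes two GB200 Superchips per compute tray and one Grace CPU plus two Blackwell GPUs per Superchip, as in NVIDIA's published GB200 NVL72 design; these per-tray and per-Superchip constants are not stated on this page.

```python
# Cross-check: compute trays in the rack diagram -> Superchips, GPUs and CPUs.
COMPUTE_TRAYS = 10 + 8      # the two groups of compute trays in the diagram
NVLINK_SWITCH_TRAYS = 9     # non-scalable NVLink switch trays in the diagram
SUPERCHIPS_PER_TRAY = 2     # assumption: GB200 NVL72 compute tray layout
GPUS_PER_SUPERCHIP = 2      # assumption: each GB200 pairs two Blackwell GPUs...
CPUS_PER_SUPERCHIP = 1      # ...with one Grace CPU

superchips = COMPUTE_TRAYS * SUPERCHIPS_PER_TRAY
gpus = superchips * GPUS_PER_SUPERCHIP
cpus = superchips * CPUS_PER_SUPERCHIP

print(f"{COMPUTE_TRAYS} compute trays -> {superchips} GB200 Superchips")
print(f"{gpus} Blackwell GPUs and {cpus} Grace CPUs")
print(f"{NVLINK_SWITCH_TRAYS} NVLink switch trays join all {gpus} GPUs")
```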


1. 1400A Rack Busbar
2. NVIDIA Cable Cartridge


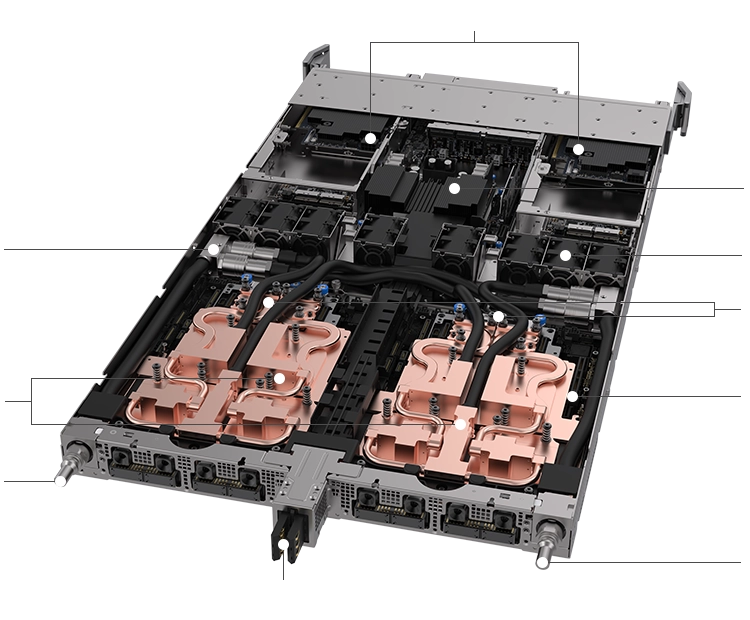

1. NVIDIA BlueField®-3 DPUs (B3240)
2. PDB
3. Inner Manifold
4. Fan Area
5. NVIDIA ConnectX®-7 Mezzanine Network Board
6. NVIDIA GB200 Grace™ Blackwell Superchips
7. HMC Module
8. Liquid Cooling Outlet
9. Busbar Clip
10. Liquid Cooling Inlet


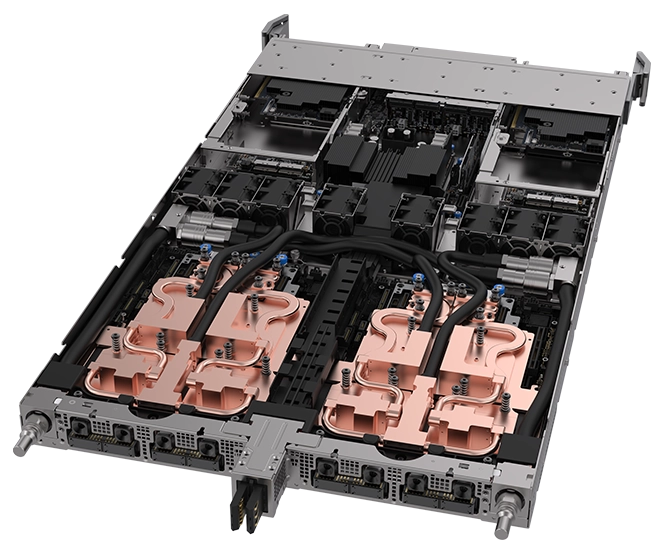

1. NVIDIA BlueField®-3 DPUs (B3240)
2. 2 x OSFP 400G
3. 1G PXE
4. 8 x E1.S
5. BMC
6. USB 3.2
7. miniDP
8. NVIDIA BlueField®-3 DPUs (B3240)
9. 2 x OSFP 400G


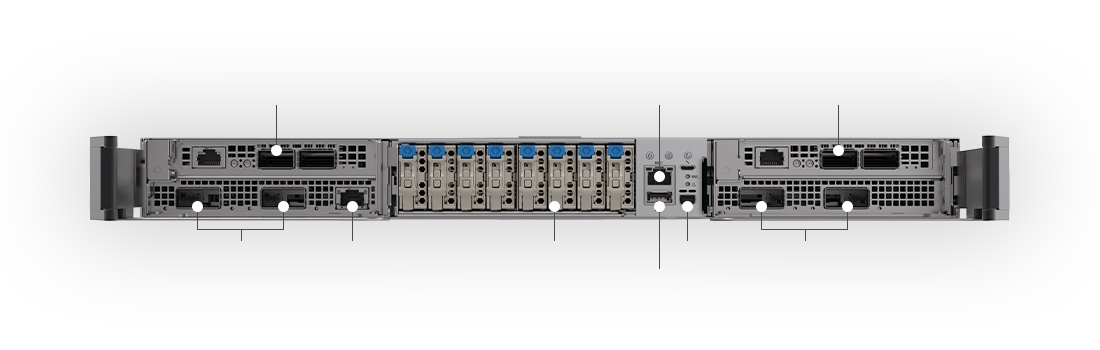

1. Liquid Cooling Outlet
2. NVIDIA® NVLink™ Connector
3. Busbar Clip
4. NVIDIA® NVLink™ Connector
5. Liquid Cooling Inlet


30X LLM Inference vs. NVIDIA H100 Tensor Core GPU

4X LLM Training vs. H100

25X Energy Efficiency vs. H100

18X Data Processing vs. CPU

- LLM inference and energy efficiency: token-to-token latency (TTL) = 50 milliseconds (ms) real time, first-token latency (FTL) = 5 s, 32,768 input/1,024 output tokens, NVIDIA HGX™ H100 scaled over InfiniBand (IB) vs. GB200 NVL72. LLM training: 1.8T-parameter MoE, 4,096x HGX H100 scaled over IB vs. 456x GB200 NVL72 scaled over IB. Cluster size: 32,768.

- Data processing: a database join and aggregation workload with Snappy/Deflate compression, derived from the TPC-H Q4 query. Custom query implementations for x86, a single H100 GPU and a single GPU from GB200 NVL72 vs. Intel Xeon 8480+.

- Projected performance subject to change
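The two latency limits in the first footnote bound how long a single request may take. Assuming FTL covers the first output token and every later token arrives within the TTL limit, a minimal sketch of the worst-case budget for a 1,024-token response looks like this:

```python
# Worst-case latency budget implied by the benchmark's FTL/TTL limits.
# Assumption: FTL covers the first token; TTL bounds each subsequent token.
FTL_S = 5.0           # first-token latency limit (seconds)
TTL_S = 0.050         # token-to-token latency limit (50 ms)
OUTPUT_TOKENS = 1_024

worst_case_s = FTL_S + (OUTPUT_TOKENS - 1) * TTL_S
min_tokens_per_s = 1 / TTL_S  # minimum sustained decode rate per user

print(f"worst-case response time: {worst_case_s:.2f} s")
print(f"minimum decode rate per user: {min_tokens_per_s:.0f} tokens/s")
```

These are per-request limits; the sketch does not model how many requests run concurrently.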


AI Infrastructure at scale

ASUS continues to demonstrate its expertise in building AI infrastructure with the powerful NVIDIA GB200 NVL72. This advanced system, housed in a single rack with multiple compute nodes, draws approximately 120 kW of power. Given this substantial power requirement, data center operators need to reassess and, where necessary, upgrade their existing facility topology and configuration. Optimization efforts should focus on ensuring stable performance through enhanced heat dissipation, robust networking connections and the overall architectural integrity of the facility. As AI workloads grow more demanding, these infrastructure improvements are essential for meeting performance and efficiency goals while maintaining operational stability.
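As a first planning check, the ~120 kW figure can be compared with the power shelves listed in the rack diagram (two groups of three 1RU, 33 kW shelves). This is a minimal sketch: it treats the shelf rating as usable capacity and ignores redundancy schemes, conversion losses and facility-side limits, none of which this page specifies.

```python
# Nominal power-shelf capacity vs. approximate rack draw for one GB200 NVL72 rack.
# Assumption: shelf ratings are simply additive; redundancy and losses are ignored.
SHELVES = 3 + 3          # two groups of 1RU power shelves in the rack diagram
KW_PER_SHELF = 33        # rating per shelf from the diagram
RACK_DRAW_KW = 120       # approximate draw quoted in the text

capacity_kw = SHELVES * KW_PER_SHELF
headroom_kw = capacity_kw - RACK_DRAW_KW
utilization = RACK_DRAW_KW / capacity_kw

print(f"nominal shelf capacity: {capacity_kw} kW")            # 198 kW
print(f"headroom over ~{RACK_DRAW_KW} kW draw: {headroom_kw} kW")  # 78 kW
print(f"nominal utilization: {utilization:.0%}")               # 61%
```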


Maximize efficiency, minimize heat

Liquid-cooling architectures

ASUS, in collaboration with partners, offers comprehensive cabinet-level liquid-cooling solutions. These include CPU/GPU cold plates, cooling distribution units and cooling towers, all designed to minimize power consumption and optimize power-usage effectiveness (PUE) in data centers.
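PUE is total facility power divided by IT equipment power, so a value closer to 1.0 means less power spent on cooling and distribution overhead. A minimal sketch follows; the IT load reuses the ~120 kW rack figure from this page, while the two overhead values are hypothetical numbers chosen only to illustrate the ratio, not ASUS measurements.

```python
def pue(total_facility_kw: float, it_load_kw: float) -> float:
    """Power usage effectiveness = total facility power / IT equipment power."""
    if it_load_kw <= 0:
        raise ValueError("IT load must be positive")
    if total_facility_kw < it_load_kw:
        raise ValueError("facility power cannot be below IT load")
    return total_facility_kw / it_load_kw


IT_LOAD_KW = 120.0  # approximate GB200 NVL72 rack draw quoted on this page

# Hypothetical cooling/distribution overheads, for illustration only.
overheads_kw = {"higher overhead": 60.0, "lower overhead": 18.0}

for label, overhead in overheads_kw.items():
    print(f"{label}: PUE = {pue(IT_LOAD_KW + overhead, IT_LOAD_KW):.2f}")
# prints "higher overhead: PUE = 1.50" and "lower overhead: PUE = 1.15"
```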


Liquid-to-air solutions

- Ideal for small-scale data centers with compact facilities.
- Designed to meet the needs of existing air-cooled data centers and integrate easily with current infrastructure.
- Perfect for enterprises seeking immediate implementation and deployment.

Liquid-to-liquid solutions

- Ideal for large-scale infrastructure running heavy workloads.
- Sustains a low PUE and high energy efficiency over the long term.
- Reduces total cost of ownership (TCO) for cost-effective operations.


Brilliantly Fast

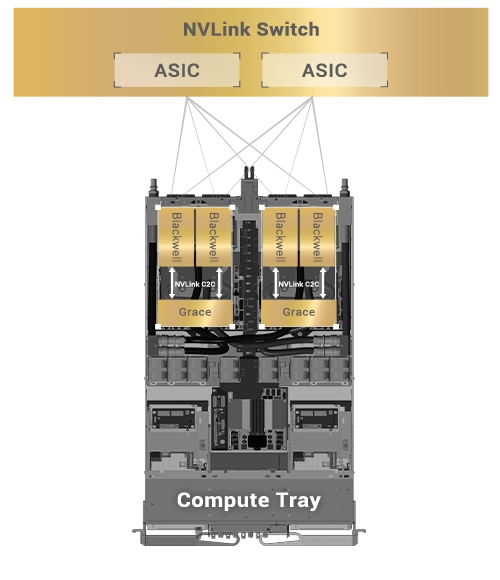

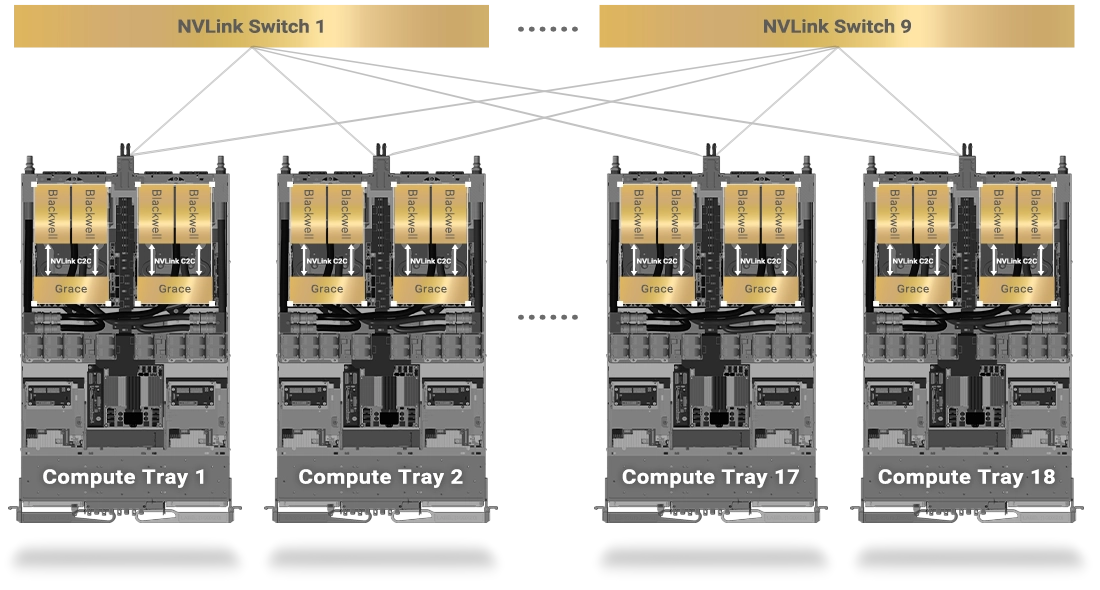

5th-gen NVLink technology in NVIDIA GB200 NVL72

The NVIDIA NVLink Switch features 144 ports with a switching capacity of 14.4 TB/s, allowing nine switches to interconnect with the NVLink ports on each of the 72 NVIDIA Blackwell GPUs within a single NVLink domain.

NVLink connectivity within a single compute tray, providing direct connections to all GPUs

NVLink connectivity within a single rack
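The NVLink Switch figures can be checked for balance. Assuming the nine switches' ports are split evenly across the 72 GPUs and each port carries an equal share of the 14.4 TB/s switching capacity, a minimal sketch yields 18 links and 1.8 TB/s per GPU, which matches NVIDIA's published fifth-generation NVLink bandwidth per Blackwell GPU.

```python
# Port and bandwidth bookkeeping for one GB200 NVL72 NVLink domain.
# Assumption: ports are spread evenly over the GPUs and share capacity equally.
SWITCHES = 9
PORTS_PER_SWITCH = 144
SWITCH_CAPACITY_TBPS = 14.4
GPUS = 72

total_ports = SWITCHES * PORTS_PER_SWITCH
assert total_ports % GPUS == 0, "ports do not divide evenly across GPUs"

links_per_gpu = total_ports // GPUS
tbps_per_port = SWITCH_CAPACITY_TBPS / PORTS_PER_SWITCH
tbps_per_gpu = links_per_gpu * tbps_per_port
domain_tbps = SWITCHES * SWITCH_CAPACITY_TBPS

print(f"{total_ports} switch ports -> {links_per_gpu} NVLink links per GPU")
print(f"{tbps_per_port * 1000:.0f} GB/s per port, {tbps_per_gpu:.1f} TB/s per GPU")
print(f"aggregate switching capacity: {domain_tbps:.1f} TB/s")
```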


ASUS Premiere Service Suite

Transforming data centers with full-scale integration, from hardware to applications

ASUS has redefined its approach to infrastructure service architecture by providing a full spectrum of services — from design and construction to implementation, validation, testing and deployment. This is seamlessly integrated with cloud-application services, all delivered with a customized, client-specific service model to meet diverse customer requirements.

- Architecture Design
- Large-scale Deployment
- Configuration Test
- Customization
- Cloud Services
- Project Delivery

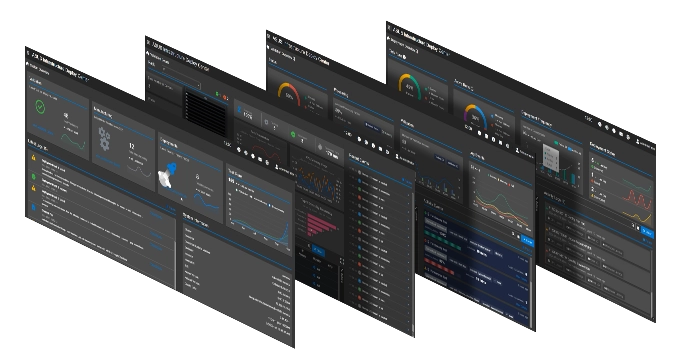

Accelerate your time to market

ASUS self-owned software and controller


ASUS Control Center (ACC)

Centralized IT-management software for monitoring and controlling ASUS servers

- Power Master – Effective energy control for data centers

- Easy search and control of your devices

- Enhance information security easily and quickly


ASUS Infrastructure Deployment Center (AIDC)

One management console to realize centralized remote deployment

- Automation and systemization

- Centralized configuration control and management

- Accelerated deployment for rack-scale infrastructure


Rack Management Controller (RMC)

A centralized controller for remote hardware management within a server rack

- Troubleshooting, management and maintenance

- Batch monitoring
