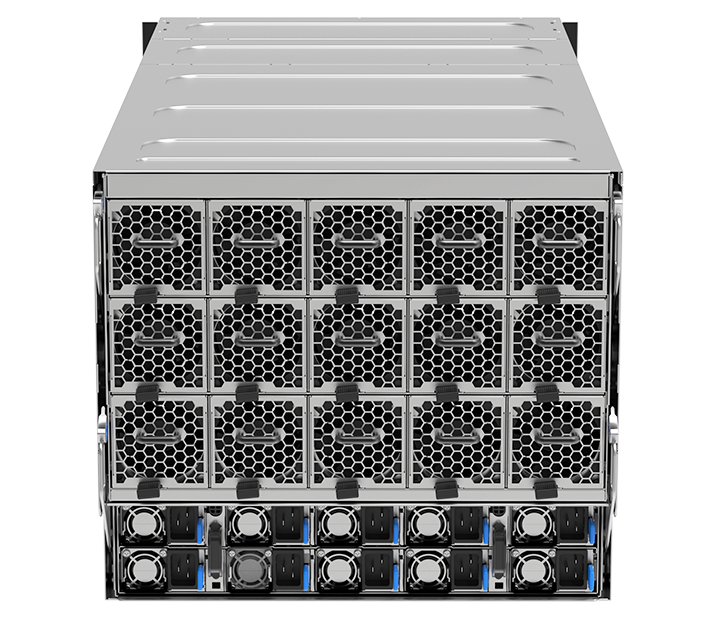

Next-Level 8-GPU Server for Heavy Enterprise and CSP AI Workloads

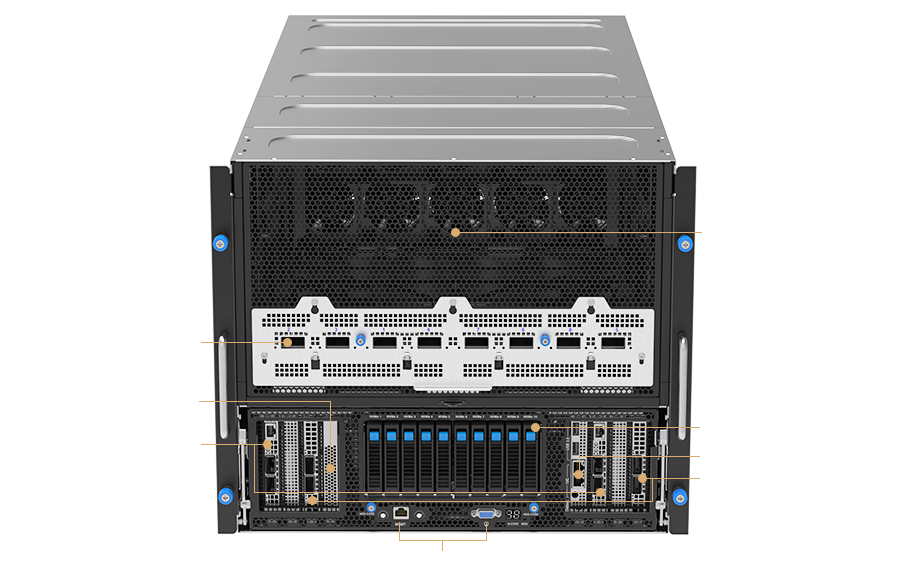

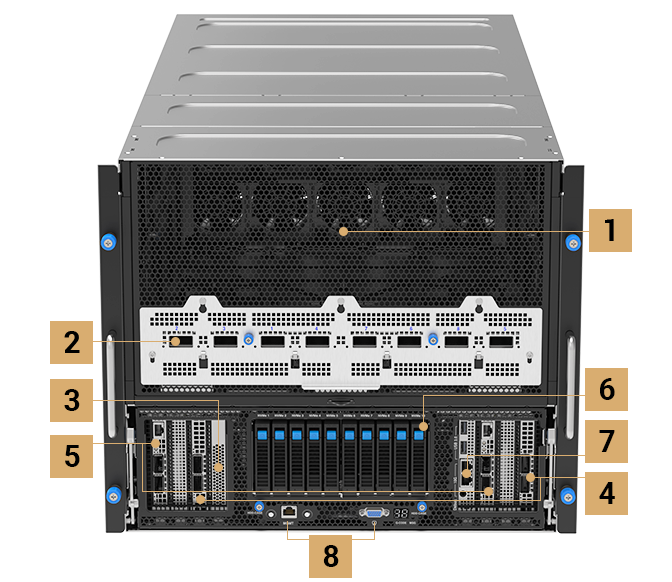

NVIDIA HGX™ B300 8-GPU server

Unmatched End-to-End Accelerated Computing Platform

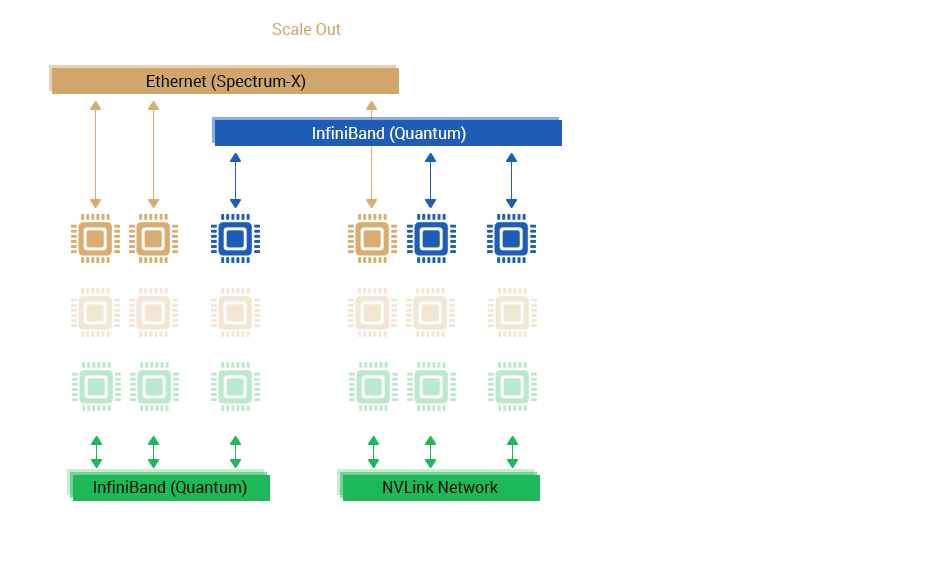

The platform is further enhanced by fifth-generation NVLink with 1.8TB/s of GPU-to-GPU bandwidth, InfiniBand networking, and NVIDIA Magnum IO™ software, ensuring efficient scaling for enterprises and large-scale GPU computing clusters.

GPU

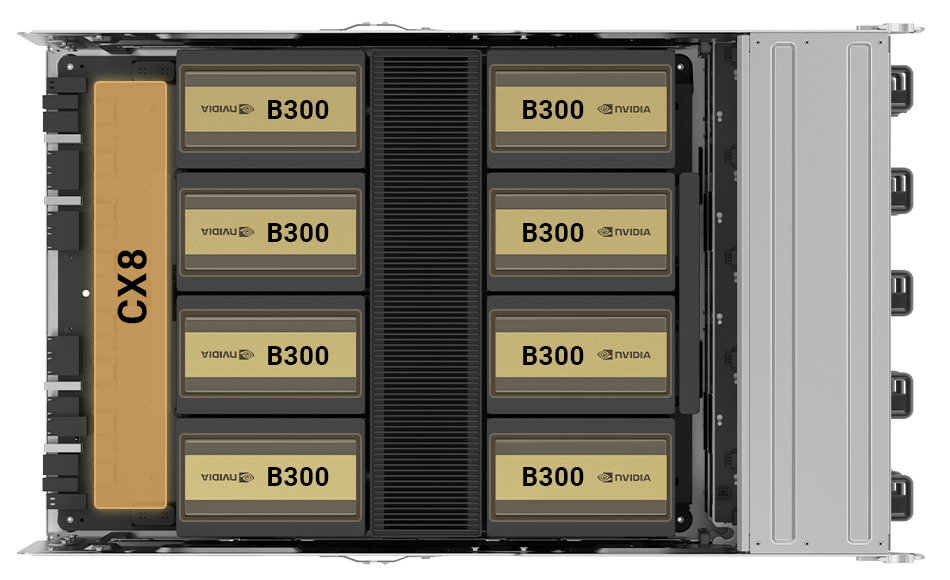

NVIDIA HGX™ Blackwell Ultra B300

Processor

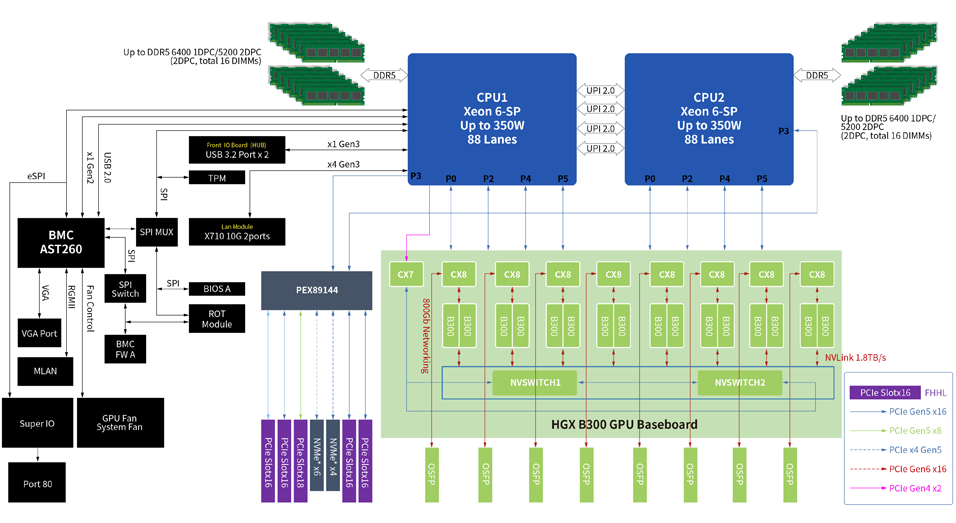

2 × Intel® Xeon® 6 Processors (SP), 350W TDP

Memory

32 × DDR5-6400 DIMM slots (max 4TB)

Expansion

5 × PCIe Gen5 slots (4 × x16 + 1 × x8)

Storage

10 × 2.5" NVMe drive bays

Power Supply

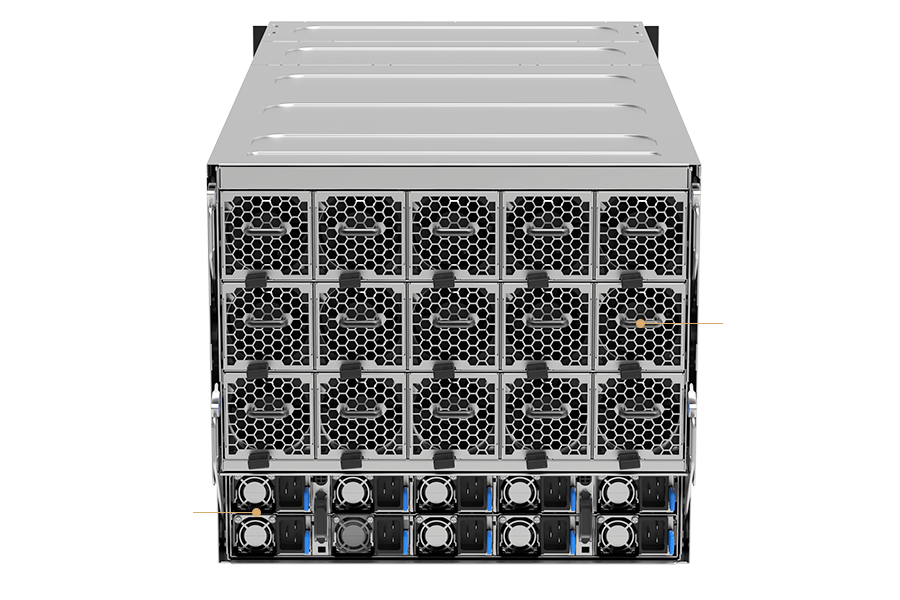

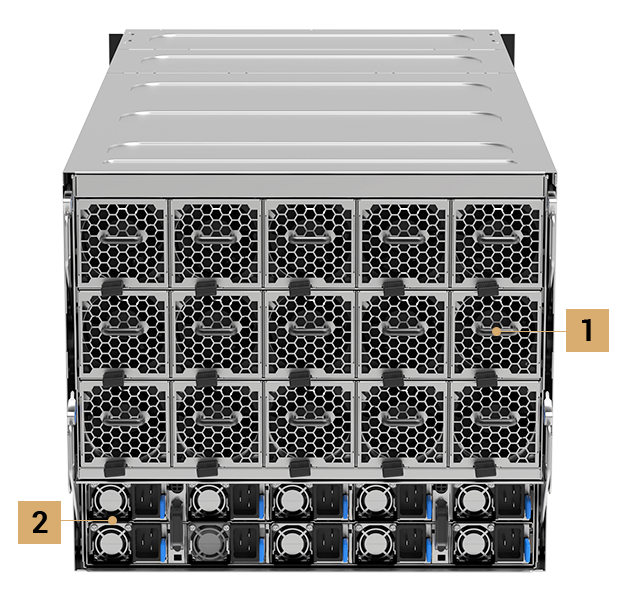

5+5 redundant 3200W 80 PLUS Titanium PSUs

Component callouts (image): GPU fans ×15 (54V, 8080); PSUs ×10 (54V, 3200W); HGX B300; OSFP connectors ×8; RAID (x8); PCIe slot (x16); BlueField-3; U.2 storage ×10; FPB; IO panel.
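The memory and power figures in the spec list imply some simple arithmetic. The sketch below is a back-of-the-envelope check, not an ASUS sizing tool, and rests on two assumptions of our own: that the 4TB maximum is reached with all 32 slots populated by identical DIMMs (reading 4TB as 4,096GB), and that in a 5+5 configuration one set of five 3200W PSUs must be able to carry the full load alone. The function names are hypothetical.

```python
# Back-of-the-envelope sketch of the memory and power spec arithmetic.
# Assumptions (not stated in the spec sheet):
#   - "Max 4TB" means 4,096GB spread evenly over all 32 DIMM slots.
#   - In 5+5 redundancy, the 5 PSUs on one side must carry the full load alone.

PSU_COUNT = 10           # 5 + 5 PSUs
PSU_WATTS = 3200         # per PSU
REDUNDANT_SPARES = 5     # PSUs that may fail without losing power budget

DIMM_SLOTS = 32
MAX_MEMORY_GB = 4 * 1024  # 4TB read as binary (4,096GB)


def redundant_power_budget(count: int, watts: int, spares: int) -> int:
    """Power (W) still available after losing `spares` PSUs."""
    if spares >= count:
        raise ValueError("spares must be fewer than the total PSU count")
    return (count - spares) * watts


def capacity_per_dimm(max_gb: int, slots: int) -> int:
    """DIMM size (GB) needed to reach max capacity with every slot filled."""
    return max_gb // slots


if __name__ == "__main__":
    print("installed W:", PSU_COUNT * PSU_WATTS)
    print("redundant budget W:",
          redundant_power_budget(PSU_COUNT, PSU_WATTS, REDUNDANT_SPARES))
    print("GB per DIMM:", capacity_per_dimm(MAX_MEMORY_GB, DIMM_SLOTS))
```

Under these assumptions, the fully redundant power budget is 16,000W (half of the 32,000W installed), and the 4TB ceiling corresponds to 128GB DIMMs in every slot.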

Embedded NVIDIA ConnectX-8 (CX8) with XDR/800G per SXM

Ultra-fast, low-latency networking, right on the GPU
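To put "800G per SXM" in perspective, here is a rough sketch of the aggregate scale-out bandwidth across all eight GPUs. It assumes one 800Gb/s CX8 link per SXM GPU, counts nominal line rate in one direction only, and ignores encoding and protocol overhead; `aggregate_bandwidth` is a hypothetical helper, not part of any NVIDIA or ASUS tool.

```python
# Rough sketch: aggregate network bandwidth implied by "800G per SXM".
# Assumptions: 8 SXM GPUs, one 800Gb/s link each, nominal line rate in one
# direction, no encoding/protocol overhead, 8 bits per byte.

GPUS = 8
GBITS_PER_GPU = 800


def aggregate_bandwidth(gpus: int, gbits_per_gpu: int) -> tuple[float, float]:
    """Return (total Tb/s, total GB/s) at nominal line rate, one direction."""
    total_gbits = gpus * gbits_per_gpu
    return total_gbits / 1000, total_gbits / 8


if __name__ == "__main__":
    tbps, gbytes_per_s = aggregate_bandwidth(GPUS, GBITS_PER_GPU)
    print(f"{tbps} Tb/s, {gbytes_per_s} GB/s")
```

With eight 800Gb/s links this works out to 6.4Tb/s (800GB/s) per direction before overhead; real application throughput will be lower.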

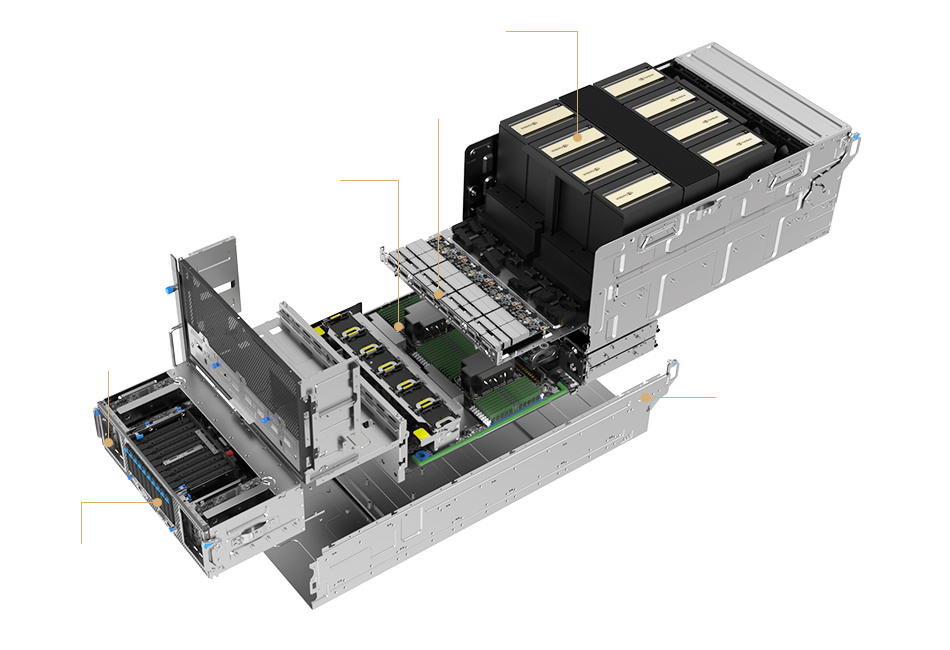

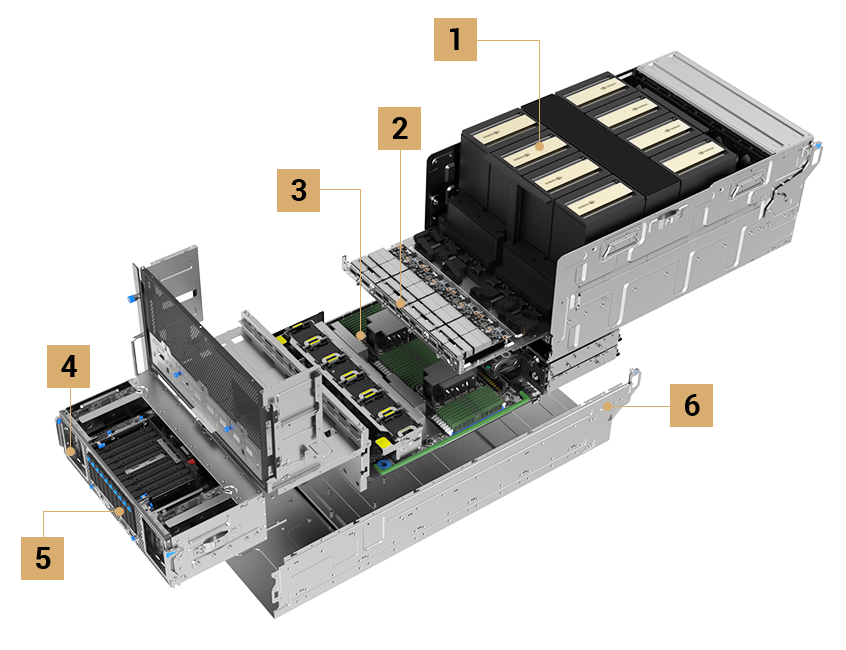

Modular Design with Reduced Cable Usage

Easy troubleshooting and thermal optimization

Advanced NVIDIA Technologies

The full power of NVIDIA GPUs, DPUs, NVLink, NVSwitch, and networking

Sustainability

Keep your data center green

Optimized Thermal Design

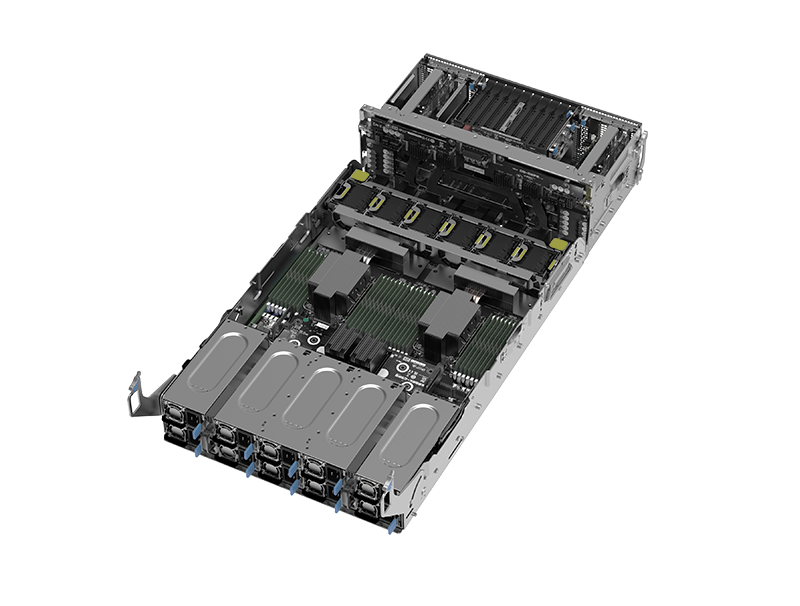

A two-level GPU and CPU sled for thermal efficiency

5+5 Power Supplies

Exceptional power efficiency

Serviceability

Improved IT operations efficiency

- Ergonomic handle design
- Tool-free thumbscrews
- Riser-card catch
- Tool-free cover

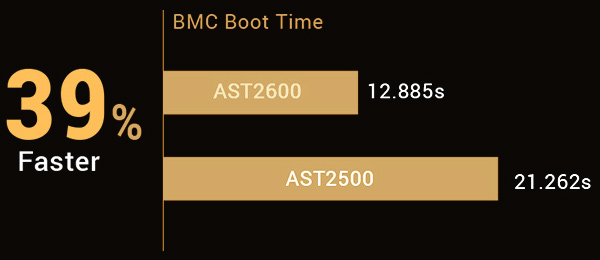

Management

Comprehensive IT infrastructure solutions

BMC

Remote server management

IT infrastructure-management software

Streamline IT operations via a single dashboard

Hardware Root-of-Trust Solution

Detect, recover, boot and protect

* The Platform Firmware Resilience (PFR) module must be specified at the time of purchase and is factory-fitted; it is not sold separately.

Trusted Platform Module 2.0

Intel Xeon 6 processors

- What AI workloads is the XA NB3I-E12 designed for? With an NVIDIA Blackwell HGX B300 8-GPU platform and dual Intel Xeon 6 processors, the XA NB3I-E12 handles LLMs, AI training, and scientific computing, while eight embedded CX8 InfiniBand interfaces provide ultra-low-latency networking.

-

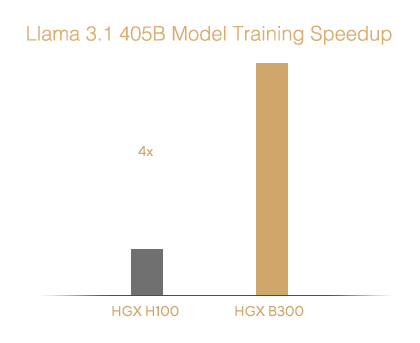

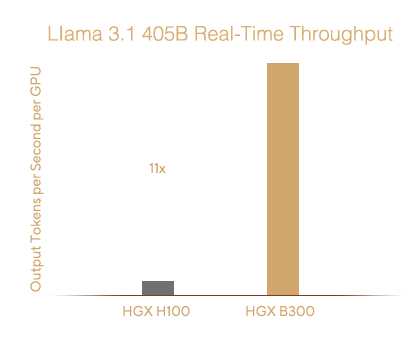

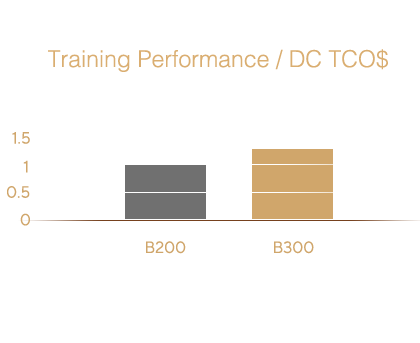

What performance does XA NB3I-E12 deliver?What performance does XA NB3I-E12 deliver? NVLink and NVSwitch provide 18 TB/s GPU bandwidth. Second-gen Transformer Engine and FP8 enable up to 4X faster training and 11X higher inference for LLMs.

- What makes the XA NB3I-E12 energy efficient? Its modular design and two-level GPU/CPU airflow reduce heat, while 5+5 redundant 80 PLUS Titanium power supplies deliver reliable, efficient power.

- How easy is the XA NB3I-E12 to manage? It supports ASUS ASMB12-iKVM, ASUS Control Center, PFR, and TPM 2.0, and its tool-free design makes maintenance fast and simple.

- What networking and storage does the XA NB3I-E12 support? Eight embedded CX8 InfiniBand interfaces (800G per SXM), five PCIe expansion slots, 32 DIMM slots, 10 NVMe drives, and dual 10Gb LAN support high-performance AI workloads.