Built for the Era of AI Reasoning

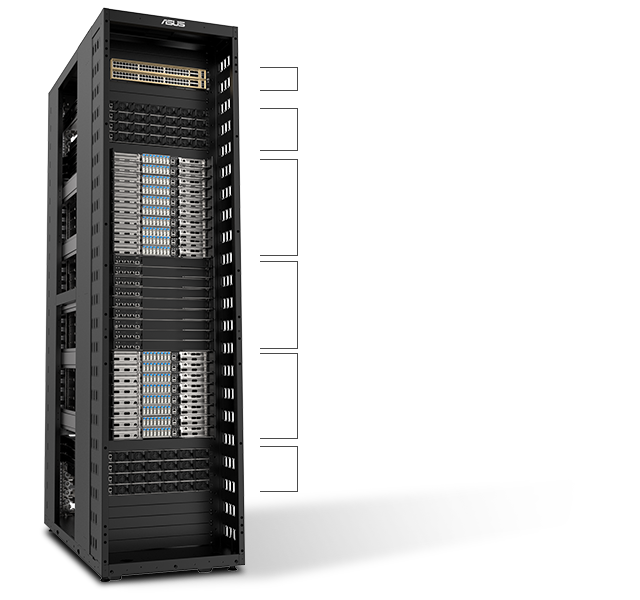

ASUS AI POD with NVIDIA GB300 NVL72

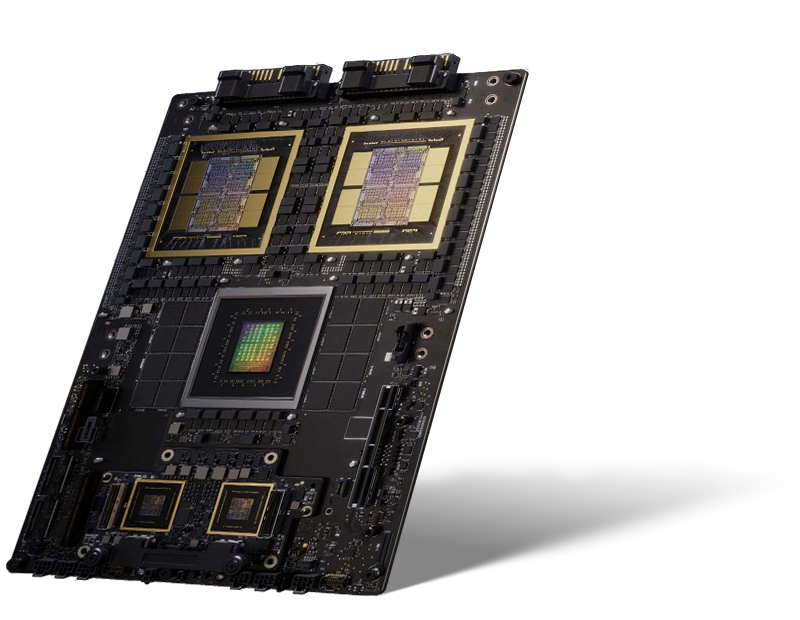

The NVIDIA Blackwell Ultra GPU Breakthrough


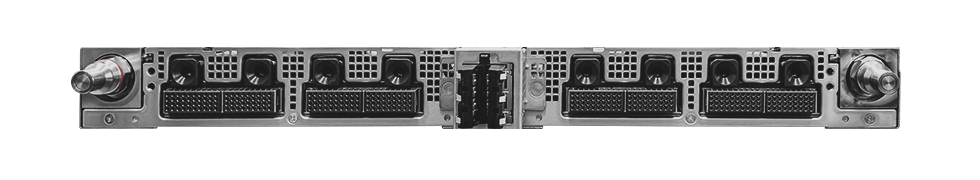

2 x OOB MGMT Switch 1GbE

1 x OS Switch 1GbE (Optional)

3 or 4 x 1RU Power Shelf 33 kW

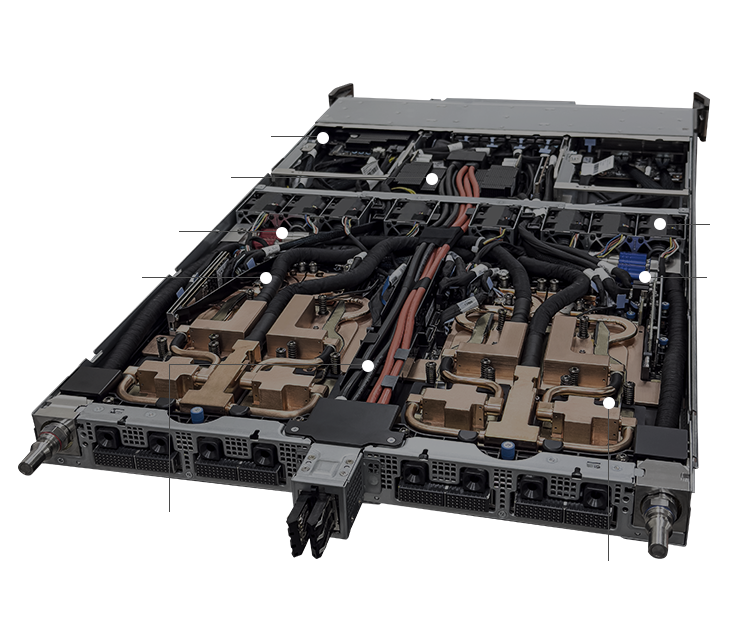

10 x Compute Trays

• 4 x NVIDIA Blackwell Ultra GPUs per tray

• 2 x NVIDIA Grace CPUs per tray

9 x NVLink Switch Trays

• Interconnects 72 GPUs at 1,800 GB/s per GPU with 5th Gen NVLink

8 x Compute Trays

• 4 x NVIDIA Blackwell Ultra GPUs per tray

• 2 x NVIDIA Grace CPUs per tray

3 or 4 x 1RU Power Shelf 33 kW
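The tray counts in this rack layout can be cross-checked with a few lines of arithmetic. The following is a minimal sketch, not an ASUS or NVIDIA tool: the function and constant names are illustrative, the aggregate NVLink figure is a simple sum of per-GPU bandwidth rather than a measured value, and the power calculation assumes the 33 kW rating applies to each shelf in a listed group.

```python
# Figures taken from the rack layout: two groups of compute trays (10 + 8),
# 4 GPUs and 2 CPUs per tray, 1,800 GB/s of NVLink bandwidth per GPU.
COMPUTE_TRAYS = 10 + 8
GPUS_PER_TRAY = 4
CPUS_PER_TRAY = 2
NVLINK_GB_S_PER_GPU = 1_800


def rack_totals():
    """Return GPU count, CPU count, and summed NVLink bandwidth in TB/s."""
    gpus = COMPUTE_TRAYS * GPUS_PER_TRAY
    cpus = COMPUTE_TRAYS * CPUS_PER_TRAY
    # Naive aggregate: per-GPU bandwidth multiplied by GPU count.
    nvlink_tb_s = gpus * NVLINK_GB_S_PER_GPU / 1_000
    return {"gpus": gpus, "cpus": cpus, "nvlink_tb_s": nvlink_tb_s}


def power_shelf_capacity_kw(shelves, kw_per_shelf=33):
    """Nominal capacity of one power-shelf group (assumes 33 kW per shelf)."""
    return shelves * kw_per_shelf


print(rack_totals())
print([power_shelf_capacity_kw(n) for n in (3, 4)])
```

The totals of 72 GPUs and 36 CPUs match the figures quoted for the GB300 NVL72 elsewhere on this page.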


Fifth-generation NVIDIA NVLink™

NVIDIA Quantum-X800 InfiniBand /

NVIDIA Spectrum-X™ Ethernet

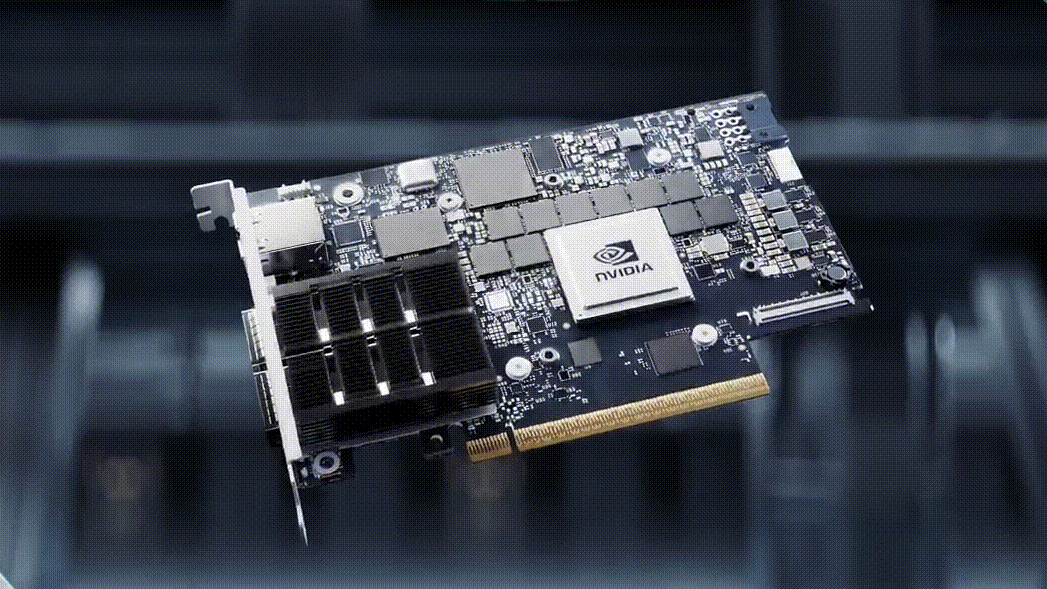

NVIDIA® BlueField®-3

Total Solution to Speed Time to Market

Storage Solutions Optimized for Workloads

Maximize efficiency, minimize heat

Liquid-cooling architectures

Liquid-to-air solutions

• Ideal for small-scale data centers with compact facilities.

• Designed to meet the needs of existing air-cooled data centers and easily integrate with current infrastructure.

• Perfect for enterprises seeking immediate implementation and deployment.

Liquid-to-liquid solutions

• Ideal for large-scale, extensive infrastructure with high workloads.

• Provides long-term, low PUE with sustained energy efficiency over time.

• Reduces TCO for maximum value and cost-effective operations.
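PUE (power usage effectiveness) is the ratio of total facility power to the power drawn by IT equipment, so a value closer to 1.0 means less overhead spent on cooling and power distribution. The sketch below shows the calculation only; the function name and the wattage figures are hypothetical and are not ASUS measurements for either cooling option.

```python
def pue(total_facility_kw, it_equipment_kw):
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


# Hypothetical example: 120 kW of IT load in a facility drawing 150 kW overall.
print(round(pue(150.0, 120.0), 2))  # prints 1.25
```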

Validated Topologies for Scalable AI Infrastructure

- Predictable Performance: Assured bandwidth and low latency for demanding AI workloads

- Simplified Scaling: Validated designs ensure smooth growth from rack to cluster

- Deployment Efficiency: Reference architectures accelerate setup and reduce complexity

Accelerate your time to market

ASUS in-house software and controller


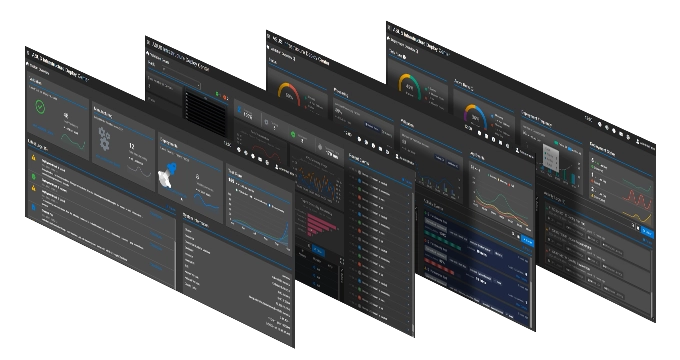

ASUS Control Center (ACC)

Centralized IT-management software for monitoring and controlling ASUS servers

- Power Master – Effective energy control for data centers

- Easy search and control of your devices

- Enhance information security easily and quickly

What is the ASUS AI POD with NVIDIA GB300 NVL72?

The ASUS AI POD with NVIDIA GB300 NVL72 is a rack-scale AI infrastructure solution that integrates 72 NVIDIA Blackwell Ultra GPUs and 36 NVIDIA Grace CPUs to power large-scale LLM inference, training, and AI reasoning.

How does the NVIDIA GB300 NVL72 in ASUS AI POD improve AI performance?

The NVIDIA GB300 NVL72 leverages 5th-gen NVIDIA NVLink™ and ConnectX-8 SuperNICs with Quantum-X800 InfiniBand or Spectrum-X™ Ethernet to deliver seamless GPU communication, low latency, and high-bandwidth data flow, accelerating training and inference.

What cooling options are available for the ASUS AI POD with NVIDIA GB300 NVL72?

The ASUS AI POD with NVIDIA GB300 NVL72 supports both liquid-to-air and liquid-to-liquid cooling solutions, ensuring efficient heat dissipation and stable performance under the heaviest AI workloads.

Is the ASUS AI POD with NVIDIA GB300 NVL72 designed for data centers and enterprise AI?

Yes. The NVIDIA GB300 NVL72-based ASUS AI POD is optimized for enterprise AI, hyperscale data centers, cloud service providers, and research institutions, delivering scalable performance for trillion-parameter LLMs, MoE, and other demanding AI applications.

What services are included with the ASUS AI POD featuring NVIDIA GB300 NVL72?

Beyond hardware, ASUS Professional Services includes software integration, storage solutions, network topology design, cooling expertise, and deployment services, helping organizations accelerate time to market.