Propelling the Data Center Into a New Era of Accelerated Computing

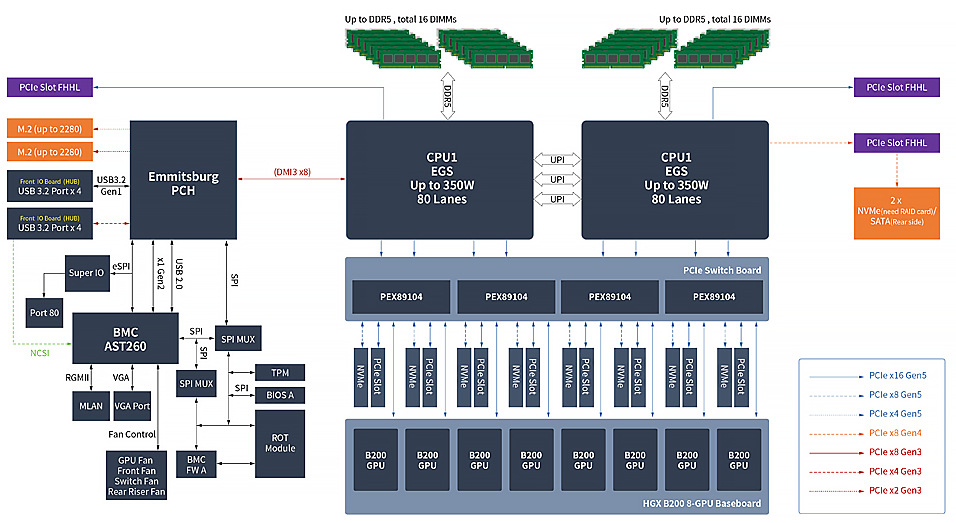

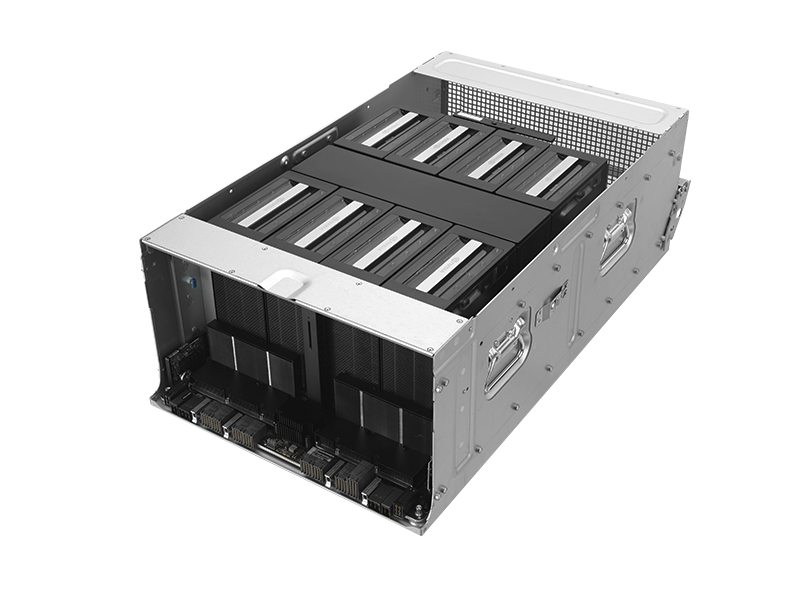

NVIDIA Blackwell HGX™ B200

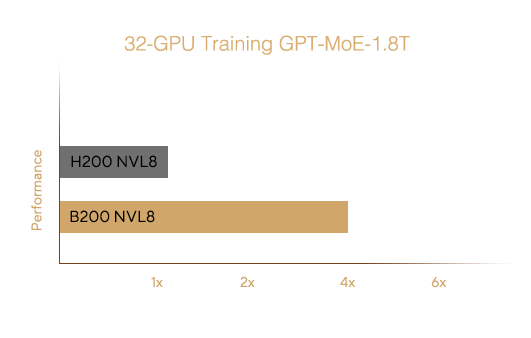

Training at Scale

Inference at Scale

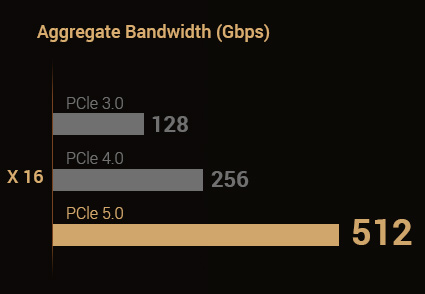

PCIe 5.0 Ready

Faster storage, graphics and networking capabilities

1,800 GB/s bandwidth

Direct GPU-to-GPU interconnect via NVLink

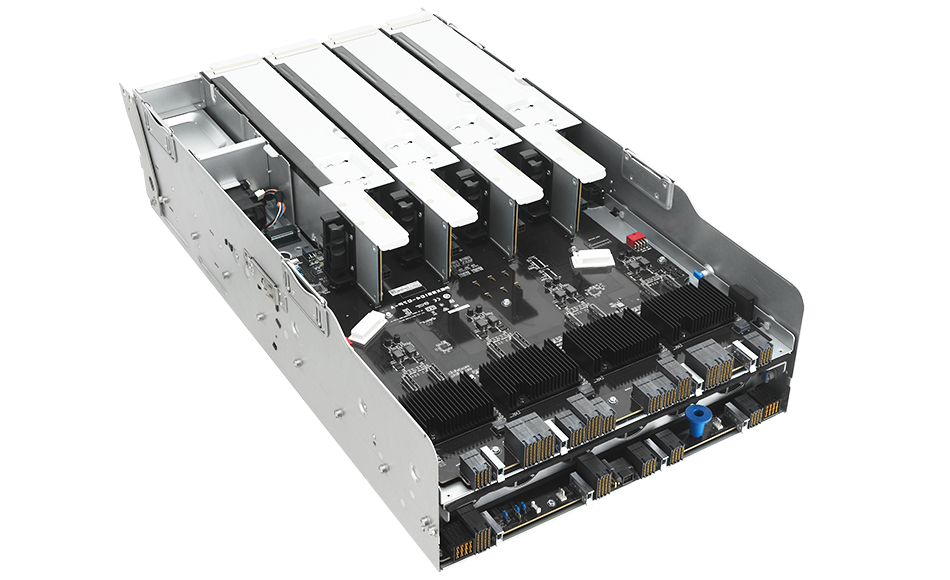

PCIe 5.0 switch board delivers faster connections between storage, graphics and NICs

10 NVMe storage drives

- 8 on front panel

- 2 on rear panel

Modular, tool-free design with sleds and handles

Independent airflow tunnel design with dual-rotor fan modules

GPU Sled Fan 8080 x 15

NVMe x 8

NVMe x 2

1 x PCIe Gen4 x8

8 x PCIe Gen5 x16 (LP)

2 x PCIe Gen5 x16

PSU x 6

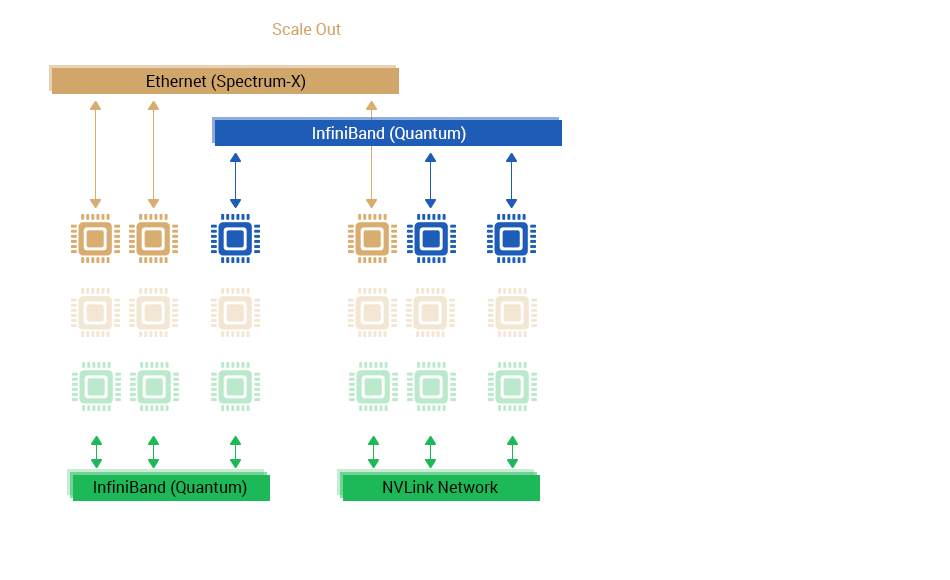

One-GPU-to-one-NIC Topology

Supports eight NICs and eight GPUs in one system
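
As a rough illustration of what a one-GPU-to-one-NIC layout implies, the sketch below pairs each of eight GPUs with its own NIC. The device names (`gpu0`, `nic0`, ...) and the helper `one_to_one_topology` are hypothetical and used only for illustration; the actual device enumeration on the system may differ.

```python
# Illustrative sketch only: device names and indices are hypothetical,
# not taken from the product documentation.
NUM_GPUS = 8
NUM_NICS = 8


def one_to_one_topology(num_gpus: int, num_nics: int) -> dict[str, str]:
    """Pair GPU i with NIC i; a 1:1 layout requires equal counts."""
    if num_gpus != num_nics:
        raise ValueError("a one-to-one topology needs equal GPU and NIC counts")
    return {f"gpu{i}": f"nic{i}" for i in range(num_gpus)}


topology = one_to_one_topology(NUM_GPUS, NUM_NICS)
for gpu, nic in topology.items():
    print(f"{gpu} <-> {nic}")
```

Because every GPU has a dedicated NIC in this pairing, no two GPUs share a network adapter.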

Modular Design with Reduced Cable Usage

Easy troubleshooting and thermal optimization

Advanced NVIDIA Technologies

The full power of NVIDIA GPUs, DPUs, NVLink, NVSwitch, and networking

Sustainability

Keep your data center green

Optimized Thermal Design

A two-level GPU and CPU sled for thermal efficiency

5+1 Power Supplies

High level of power efficiency

Serviceability

Improved IT operations efficiency

- Ergonomic handle design

- Tool-free thumbscrews

- Riser-card catch

- Tool-free cover

Management

Comprehensive IT infrastructure solutions

BMC

Remote server management

IT infrastructure-management software

Streamline IT operations via a single dashboard

Hardware Root-of-Trust Solution

Detect, recover, boot and protect

* Platform Firmware Resilience (PFR) module must be specified at time of purchase and is factory-fitted. It is not for sale separately.

Trusted Platform Module 2.0

Performance

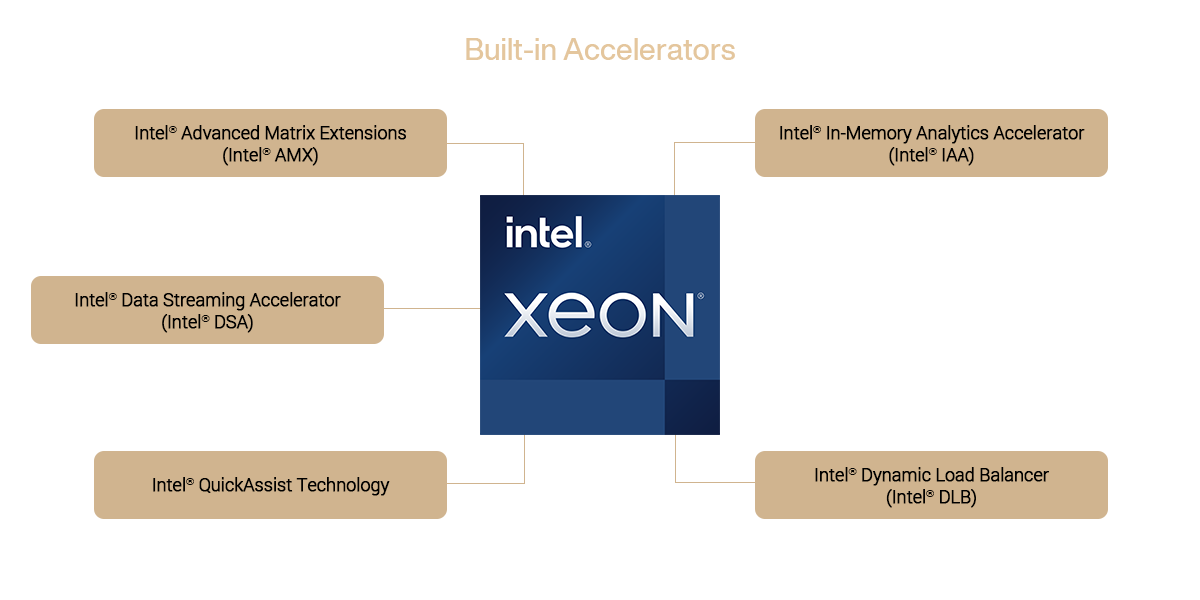

5th Gen Intel® Xeon® Scalable Processors

* Availability of accelerators varies depending on SKU.